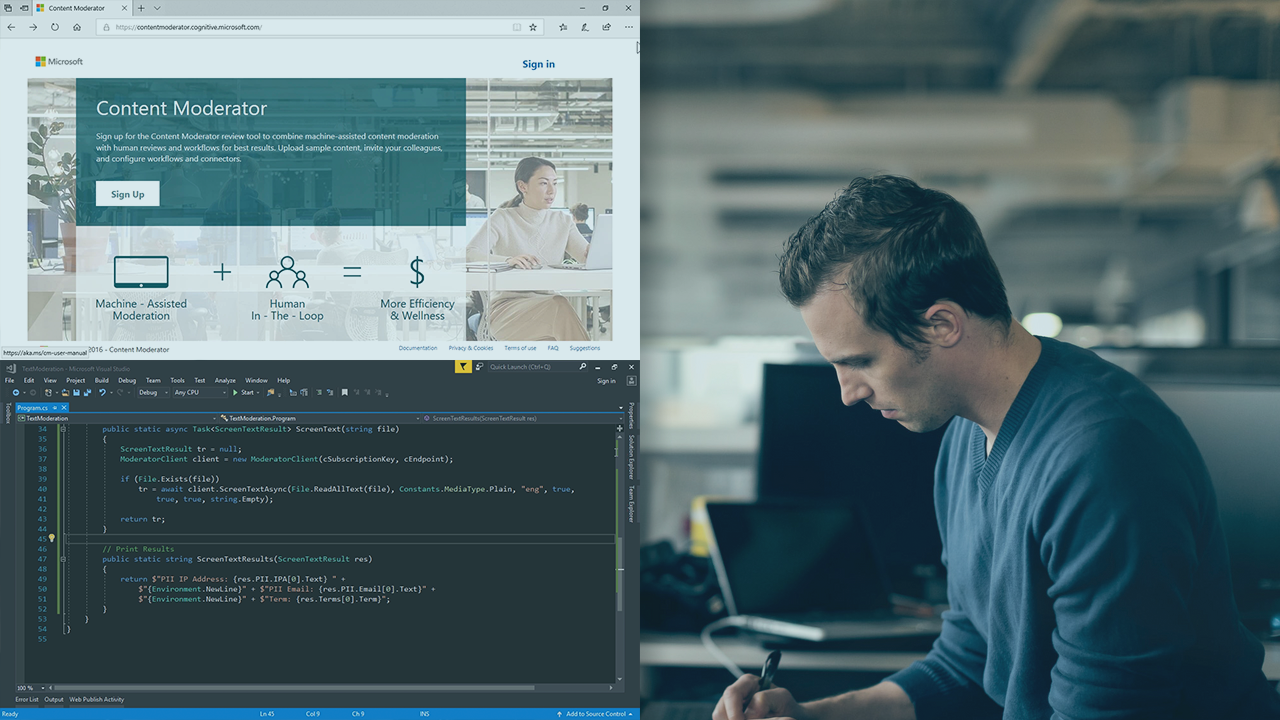

Azure Cognitive Services Content Moderator is a useful tool for finding explicit or offensive content in images, videos, and text. It also supports custom image and text lists to block or allow matching content, and it can be used as a feedback tool to assist human moderators. In this tutorial we use the API to find objectionable content in images. To use Content Moderator with text, check out this tutorial.

When you moderate text, the service response includes the following information:

- Profanity: term-based matching against a built-in list of profane terms in various languages

- Classification: machine-assisted classification into three categories

- Personally Identifiable Information (PII)

- Auto-corrected text

- Original text

- Language

Prerequisites

- To run the sample code you must have an edition of Visual Studio installed.

- You will need the Microsoft.Azure.CognitiveServices.ContentModerator NuGet package.

- You will need the Microsoft.Rest.ClientRuntime NuGet package.

- You will need the Newtonsoft.Json NuGet package.

- You will need an Azure Cognitive Services key. Follow this tutorial to get one. If you don’t have an Azure account, you can use the free trial to get a subscription key.

Create the Project

- Create a .NET Core console application in Visual Studio 2017.

- Add the Microsoft.Azure.CognitiveServices.ContentModerator NuGet package by using the NuGet Package Manager Console.

```powershell
Install-Package Microsoft.Azure.CognitiveServices.ContentModerator
```

- Add the Microsoft.Rest.ClientRuntime NuGet package by using the NuGet Package Manager Console.

```powershell
Install-Package Microsoft.Rest.ClientRuntime
```

- Add the Newtonsoft.Json NuGet package by using the NuGet Package Manager Console.

```powershell
Install-Package Newtonsoft.Json
```

- Create a new class and name it Clients. Add the following code:

```csharp
using Microsoft.Azure.CognitiveServices.ContentModerator;

namespace ContentModerator
{
    public static class Clients
    {
        // The region/location for your Content Moderator account.
        private static readonly string AzureRegion = "westeurope";

        // The base URL fragment for Content Moderator calls.
        private static readonly string AzureBaseURL = $"https://{AzureRegion}.api.cognitive.microsoft.com";

        // Your Content Moderator subscription key.
        private static readonly string CMSubscriptionKey = "ENTER KEY HERE";

        // Returns a new Content Moderator client for your subscription.
        public static ContentModeratorClient NewClient()
        {
            // Create and initialize an instance of the Content Moderator API wrapper.
            ContentModeratorClient client = new ContentModeratorClient(new ApiKeyServiceClientCredentials(CMSubscriptionKey));
            client.Endpoint = AzureBaseURL;
            return client;
        }
    }
}
```

- Replace the Azure region and the Content Moderator key in the code above with the values for your resource from the Azure portal.

- Create a new class and name it EvaluationData:

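```csharp
using Microsoft.Azure.CognitiveServices.ContentModerator.Models;

namespace ContentModerator
{
    // Holds the image moderation, text detection, and face detection results for one image.
    public class EvaluationData
    {
        public string ImageUrl { get; set; }
        public Evaluate ImageModeration { get; set; }
        public OCR TextDetection { get; set; }
        public FoundFaces FaceDetection { get; set; }
    }
}
```

- Open the Program.cs file and add the following code to declare two sample images: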

```csharp
// Inside the Program class
public static string SampleImage1 = "http://codestories.gr/wp-content/uploads/2019/03/sample1.jpeg";
public static string SampleImage2 = "http://codestories.gr/wp-content/uploads/2019/03/sample2.jpg";
```

- Then add the following function, which performs image moderation, text detection, and face detection:

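```csharp
// Program.cs needs: using System.Threading;
// using Microsoft.Azure.CognitiveServices.ContentModerator;
// using Microsoft.Azure.CognitiveServices.ContentModerator.Models;
private static EvaluationData EvaluateImage(ContentModeratorClient client, string imageUrl)
{
    var url = new BodyModel("URL", imageUrl.Trim());
    var imageData = new EvaluationData();
    imageData.ImageUrl = url.Value;

    // Evaluate the image for adult and racy content.
    // The final 'true' argument is the cacheImage flag.
    imageData.ImageModeration = client.ImageModeration.EvaluateUrlInput("application/json", url, true);
    Thread.Sleep(1000); // pause between calls to stay within the request rate limit

    // Detect and extract English text (OCR).
    imageData.TextDetection = client.ImageModeration.OCRUrlInput("eng", "application/json", url, true);
    Thread.Sleep(1000);

    // Detect faces.
    imageData.FaceDetection = client.ImageModeration.FindFacesUrlInput("application/json", url, true);
    Thread.Sleep(1000);

    return imageData;
}
```

- Add the following code inside Main to call the EvaluateImage function: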

```csharp
// Program.cs also needs: using System; using System.Collections.Generic; using Newtonsoft.Json;
List<EvaluationData> evaluationData = new List<EvaluationData>();

// Create an instance of the Content Moderator API wrapper.
using (var client = Clients.NewClient())
{
    EvaluationData imageData = EvaluateImage(client, SampleImage1);
    evaluationData.Add(imageData);

    imageData = EvaluateImage(client, SampleImage2);
    evaluationData.Add(imageData);
}

// Print all results as indented JSON.
Console.WriteLine(JsonConvert.SerializeObject(evaluationData, Formatting.Indented));
```

- Run the program to get the results.
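EvaluateImage always sleeps for a full second after each call with Thread.Sleep(1000), even when the call itself already took longer than that. If you process many images, a throttle that waits only for the part of the interval that has not yet passed can save time. The sketch below is a hypothetical helper, not part of the Content Moderator SDK. The one-second interval you would pass in is an assumption about your subscription tier's rate limit.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper (not part of the Content Moderator SDK): guarantees a
// minimum gap between consecutive API calls instead of always sleeping 1 second.
public sealed class RequestThrottle
{
    private readonly TimeSpan _minInterval;
    private readonly Stopwatch _sinceLastCall = new Stopwatch();

    public RequestThrottle(TimeSpan minInterval)
    {
        _minInterval = minInterval;
    }

    // Blocks only for the part of the interval that has not already elapsed
    // since the previous call to Wait() returned. The first call never blocks.
    public void Wait()
    {
        if (_sinceLastCall.IsRunning)
        {
            TimeSpan remaining = _minInterval - _sinceLastCall.Elapsed;
            while (remaining > TimeSpan.Zero)
            {
                Thread.Sleep(remaining);
                remaining = _minInterval - _sinceLastCall.Elapsed;
            }
        }

        // Start timing the next interval from now.
        _sinceLastCall.Restart();
    }
}
```

To use it, you could keep one `RequestThrottle` created with `TimeSpan.FromSeconds(1)` in a static field and call `throttle.Wait()` before each of the three API calls in EvaluateImage, in place of the `Thread.Sleep(1000)` lines after them.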

You can find the complete source code on my GitHub, in this repository.
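The Clients class hardcodes the region and the subscription key. A common alternative is to read them from environment variables so that the key never ends up in source control. This is a minimal sketch; the class name `ModeratorSettings` and the variable names `CONTENT_MODERATOR_REGION` and `CONTENT_MODERATOR_KEY` are hypothetical.

```csharp
using System;

// Hypothetical helper: keeps the region and key out of source code.
// The environment variable names are assumptions; choose your own.
public static class ModeratorSettings
{
    public const string RegionVariable = "CONTENT_MODERATOR_REGION";
    public const string KeyVariable = "CONTENT_MODERATOR_KEY";

    // Same URL pattern as AzureBaseURL in the Clients class.
    public static string BuildEndpoint(string region)
    {
        if (string.IsNullOrWhiteSpace(region))
            throw new ArgumentException("A region such as \"westeurope\" is required.", nameof(region));
        return $"https://{region.Trim()}.api.cognitive.microsoft.com";
    }

    // Returns the value of a required environment variable, or fails with a clear message.
    public static string GetRequired(string name)
    {
        var value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
            throw new InvalidOperationException($"Environment variable '{name}' is not set.");
        return value;
    }
}
```

In NewClient you could then pass `ModeratorSettings.GetRequired(ModeratorSettings.KeyVariable)` to `ApiKeyServiceClientCredentials` and set `client.Endpoint = ModeratorSettings.BuildEndpoint(ModeratorSettings.GetRequired(ModeratorSettings.RegionVariable));`. A missing variable then fails at startup with a readable message instead of an authentication error from the service.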

Analyzing the Results

Image Moderation

These values predict whether the image contains potential adult or racy content:

- IsImageAdultClassified indicates whether the image may be considered sexually explicit or adult in certain situations.

- IsImageRacyClassified indicates whether the image may be considered sexually suggestive or mature in certain situations.

The scores, AdultClassificationScore and RacyClassificationScore, range from 0 to 1. The higher the score, the more strongly the model predicts that the category applies. Below is the result for Sample Image 1:

"ImageModeration": {

"CacheID": "97baaf0c-22d4-423a-9a6a-2eff3eb001cf_636874131499981731",

"Result": false,

"TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_d819dbfa-be02-4660-8fb8-b0a9b5b3110f",

"AdultClassificationScore": 0.0053093112073838711,

"IsImageAdultClassified": false,

"RacyClassificationScore": 0.019142752513289452,

"IsImageRacyClassified": false,

"AdvancedInfo": [{

"Key": "ImageDownloadTimeInMs",

"Value": "132"

},

{

"Key": "ImageSizeInBytes",

"Value": "302485"

}

],

"Status": {

"Code": 3000,

"Description": "OK",

"Exception": null

}

}
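The service already returns its own IsImageAdultClassified and IsImageRacyClassified flags, but you may want to act on the raw scores with your own cut-offs, for example sending borderline images to a human moderator. The sketch below is a hypothetical policy, not part of the SDK; the default thresholds of 0.3 (review) and 0.8 (block) are illustrative assumptions, not service defaults.

```csharp
using System;

// Possible outcomes of this hypothetical policy.
public enum ModerationDecision
{
    Allow,
    Review,
    Block
}

// Hypothetical helper (not part of the SDK): maps AdultClassificationScore and
// RacyClassificationScore (both between 0 and 1) to a decision using your own cut-offs.
public static class ScorePolicy
{
    // The default thresholds are illustrative assumptions, not service defaults.
    public static ModerationDecision Decide(
        double adultScore,
        double racyScore,
        double reviewThreshold = 0.3,
        double blockThreshold = 0.8)
    {
        if (adultScore < 0 || adultScore > 1)
            throw new ArgumentOutOfRangeException(nameof(adultScore));
        if (racyScore < 0 || racyScore > 1)
            throw new ArgumentOutOfRangeException(nameof(racyScore));

        // Act on whichever category scores higher.
        double highest = Math.Max(adultScore, racyScore);

        if (highest >= blockThreshold)
            return ModerationDecision.Block;
        if (highest >= reviewThreshold)
            return ModerationDecision.Review;
        return ModerationDecision.Allow;
    }
}
```

With the Sample Image 1 scores shown above (about 0.005 and 0.019), `Decide` returns `Allow`. With the Sample Image 2 scores from the complete results (about 0.395 and 0.402), it returns `Review`.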

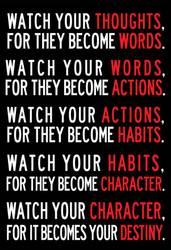

Text Detection

The Optical Character Recognition (OCR) operation predicts the presence of text content in an image and extracts it for text moderation, among other uses. You can specify the language; if you do not, detection defaults to English (the code above passes "eng" explicitly). The response includes:

- The original text.

- The detected text elements with their confidence scores.

Below is the result for Sample Image 2:

"TextDetection": {

"Status": {

"Code": 3000,

"Description": "OK",

"Exception": null

},

"Metadata": [{

"Key": "ImageDownloadTimeInMs",

"Value": "101"

},

{

"Key": "ImageSizeInBytes",

"Value": "13904"

}

],

"TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_2d765129-b5ed-49e5-9e49-48b0465fd6da",

"CacheId": "020124f2-9c26-4ca8-8a5f-3acab66abb7a_636874131601685704",

"Language": "eng",

"Text": "WATCH YOUR THOUGHTS \r\nFOR THEY BECOME WORDS. \r\nWATCH YOUR WORDS \r\nACTIONS: \r\nFOR THEY BECOME \r\nWATCH YOUR ACTIONS \r\nHABITS: \r\nFOR THEY BECOME \r\nWATCH YOUR HABITS \r\nFOR \r\nWATCH YOUR CHARACTER. \r\nFORITBECHESYOURJESTINY. \r\n",

"Candidates": []

}
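The Text field returns one detected line per "\r\n"-separated segment, with trailing spaces. Before passing the extracted text on, for example to the text moderation API covered in the other tutorial, you will probably want it cleaned up. The sketch below is a hypothetical helper (`OcrText` is not an SDK type) that splits the field into trimmed lines and joins them into a single string.

```csharp
using System;
using System.Linq;

// Hypothetical helper: turns the OCR "Text" field, whose lines are separated
// by "\r\n" and padded with trailing spaces, into clean lines.
public static class OcrText
{
    public static string[] ToLines(string ocrText)
    {
        if (string.IsNullOrEmpty(ocrText))
            return Array.Empty<string>();

        return ocrText
            .Split(new[] { "\r\n", "\n" }, StringSplitOptions.None)
            .Select(line => line.Trim())
            .Where(line => line.Length > 0)
            .ToArray();
    }

    // Joins the cleaned lines into one string, e.g. to send on for text moderation.
    public static string ToSingleLine(string ocrText) => string.Join(" ", ToLines(ocrText));
}
```

For the Sample Image 2 output, `ToLines` yields 12 lines, from "WATCH YOUR THOUGHTS" to "FORITBECHESYOURJESTINY.". The last line shows that OCR output can contain recognition errors, which is worth keeping in mind before treating it as exact text.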

Face Detection

Face detection helps you find personally identifiable information (PII), such as faces, in images. It returns the potential faces and the number of potential faces in each image. The response includes this information:

- Faces count

- List of locations of faces detected

Below is the result for Sample Image 1:

"FaceDetection": {

"Status": {

"Code": 3000,

"Description": "OK",

"Exception": null

},

"TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_d0c5447a-eba8-4538-81a9-edd1f47d8e55",

"CacheId": "abdc4991-9f09-4b9b-8537-faa3c5821022_636874131536855692",

"Result": true,

"Count": 4,

"AdvancedInfo": [{

"Key": "ImageDownloadTimeInMs",

"Value": "103"

},

{

"Key": "ImageSizeInBytes",

"Value": "302485"

}

],

"Faces": [{

"Bottom": 664,

"Left": 550,

"Right": 774,

"Top": 440

},

{

"Bottom": 620,

"Left": 858,

"Right": 1082,

"Top": 396

},

{

"Bottom": 521,

"Left": 1242,

"Right": 1421,

"Top": 342

},

{

"Bottom": 593,

"Left": 1512,

"Right": 1691,

"Top": 414

}

]

}
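Each entry in Faces is a box given by its Left, Top, Right, and Bottom pixel coordinates. In the output above Bottom is greater than Top, so y grows downward. Width is Right minus Left and height is Bottom minus Top; for Sample Image 1, two boxes are 224 by 224 pixels and two are 179 by 179. The sketch below uses a hypothetical `FaceBox` type that mirrors those four fields (the SDK has its own face model) and shows two things you might do with the boxes, such as ordering them or deciding whether any face is large enough to blur.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical type for this sketch: one entry of the "Faces" array, in pixels.
// In the sample output Bottom > Top, so y grows downward from the top edge.
public readonly struct FaceBox
{
    public FaceBox(int left, int top, int right, int bottom)
    {
        if (right < left || bottom < top)
            throw new ArgumentException("Right/Bottom must not be smaller than Left/Top.");
        Left = left;
        Top = top;
        Right = right;
        Bottom = bottom;
    }

    public int Left { get; }
    public int Top { get; }
    public int Right { get; }
    public int Bottom { get; }

    public int Width => Right - Left;
    public int Height => Bottom - Top;
    public int Area => Width * Height;
}

public static class FaceBoxes
{
    // Orders faces from left to right, e.g. to label them "face 1", "face 2", ...
    public static List<FaceBox> LeftToRight(IEnumerable<FaceBox> faces) =>
        faces.OrderBy(face => face.Left).ToList();

    // True if any face is at least minSide pixels wide and tall, e.g. large
    // enough that you might want to blur it before publishing.
    public static bool HasFaceAtLeast(IEnumerable<FaceBox> faces, int minSide) =>
        faces.Any(face => face.Width >= minSide && face.Height >= minSide);
}
```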

Complete Result from Sample Image 1

```json
{
  "ImageUrl": "http://codestories.gr/wp-content/uploads/2019/03/sample1.jpeg",
  "ImageModeration": {
    "CacheID": "97baaf0c-22d4-423a-9a6a-2eff3eb001cf_636874131499981731",
    "Result": false,
    "TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_d819dbfa-be02-4660-8fb8-b0a9b5b3110f",
    "AdultClassificationScore": 0.0053093112073838711,
    "IsImageAdultClassified": false,
    "RacyClassificationScore": 0.019142752513289452,
    "IsImageRacyClassified": false,
    "AdvancedInfo": [
      {
        "Key": "ImageDownloadTimeInMs",
        "Value": "132"
      },
      {
        "Key": "ImageSizeInBytes",
        "Value": "302485"
      }
    ],
    "Status": {
      "Code": 3000,
      "Description": "OK",
      "Exception": null
    }
  },
  "TextDetection": {
    "Status": {
      "Code": 3000,
      "Description": "OK",
      "Exception": null
    },
    "Metadata": [
      {
        "Key": "ImageDownloadTimeInMs",
        "Value": "112"
      },
      {
        "Key": "ImageSizeInBytes",
        "Value": "302485"
      }
    ],
    "TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_16508aef-6cf7-4036-96ec-b23314c292c8",
    "CacheId": "64cf30e4-f307-45c1-a7c9-ff5e522899bd_636874131522951088",
    "Language": "eng",
    "Text": "",
    "Candidates": []
  },
  "FaceDetection": {
    "Status": {
      "Code": 3000,
      "Description": "OK",
      "Exception": null
    },
    "TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_d0c5447a-eba8-4538-81a9-edd1f47d8e55",
    "CacheId": "abdc4991-9f09-4b9b-8537-faa3c5821022_636874131536855692",
    "Result": true,
    "Count": 4,
    "AdvancedInfo": [
      {
        "Key": "ImageDownloadTimeInMs",
        "Value": "103"
      },
      {
        "Key": "ImageSizeInBytes",
        "Value": "302485"
      }
    ],
    "Faces": [
      {
        "Bottom": 664,
        "Left": 550,
        "Right": 774,
        "Top": 440
      },
      {
        "Bottom": 620,
        "Left": 858,
        "Right": 1082,
        "Top": 396
      },
      {
        "Bottom": 521,
        "Left": 1242,
        "Right": 1421,
        "Top": 342
      },
      {
        "Bottom": 593,
        "Left": 1512,
        "Right": 1691,
        "Top": 414
      }
    ]
  }
}
```

Complete Result from Sample Image 2

```json
{
  "ImageUrl": "http://codestories.gr/wp-content/uploads/2019/03/sample2.jpg",
  "ImageModeration": {
    "CacheID": "6e1d4007-83d5-4003-b8b8-5c5714e0125b_636874131583212179",
    "Result": true,
    "TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_18126a32-8e3a-4d99-beb2-49ccfddd1bf4",
    "AdultClassificationScore": 0.39478206634521484,
    "IsImageAdultClassified": false,
    "RacyClassificationScore": 0.40202781558036804,
    "IsImageRacyClassified": true,
    "AdvancedInfo": [
      {
        "Key": "ImageDownloadTimeInMs",
        "Value": "4"
      },
      {
        "Key": "ImageSizeInBytes",
        "Value": "13904"
      }
    ],
    "Status": {
      "Code": 3000,
      "Description": "OK",
      "Exception": null
    }
  },
  "TextDetection": {
    "Status": {
      "Code": 3000,
      "Description": "OK",
      "Exception": null
    },
    "Metadata": [
      {
        "Key": "ImageDownloadTimeInMs",
        "Value": "101"
      },
      {
        "Key": "ImageSizeInBytes",
        "Value": "13904"
      }
    ],
    "TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_2d765129-b5ed-49e5-9e49-48b0465fd6da",
    "CacheId": "020124f2-9c26-4ca8-8a5f-3acab66abb7a_636874131601685704",
    "Language": "eng",
    "Text": "WATCH YOUR THOUGHTS \r\nFOR THEY BECOME WORDS. \r\nWATCH YOUR WORDS \r\nACTIONS: \r\nFOR THEY BECOME \r\nWATCH YOUR ACTIONS \r\nHABITS: \r\nFOR THEY BECOME \r\nWATCH YOUR HABITS \r\nFOR \r\nWATCH YOUR CHARACTER. \r\nFORITBECHESYOURJESTINY. \r\n",
    "Candidates": []
  },
  "FaceDetection": {
    "Status": {
      "Code": 3000,
      "Description": "OK",
      "Exception": null
    },
    "TrackingId": "WE_ibiza_c9ffa2cd-a5bf-4ab2-aa53-4f7019b55587_CognitiveServices.S0_cfee817f-2181-4e46-8f75-11911d0bbc3c",
    "CacheId": "895e7e26-4730-4c22-809c-0dc28e962a9b_636874131614469089",
    "Result": false,
    "Count": 0,
    "AdvancedInfo": [
      {
        "Key": "ImageDownloadTimeInMs",
        "Value": "109"
      },
      {
        "Key": "ImageSizeInBytes",
        "Value": "13904"
      }
    ],
    "Faces": []
  }
}
```
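Once you evaluate more than a couple of images, reading the printed JSON by eye gets tedious. The sketch below reads the console app's output back in and reduces each image to a one-line summary. It assumes .NET 5 or later, for records and the built-in System.Text.Json; `ImageSummary` and `ResultSummary` are hypothetical names, and the property names it reads are the ones in the results above.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// One summary per evaluated image (hypothetical type for this sketch).
public sealed record ImageSummary(string ImageUrl, bool Adult, bool Racy, int FaceCount, bool HasText);

public static class ResultSummary
{
    // Reads the JSON printed by the console app (an array of EvaluationData
    // objects) and keeps only the fields needed for a quick report.
    public static List<ImageSummary> Summarize(string json)
    {
        var summaries = new List<ImageSummary>();
        using JsonDocument doc = JsonDocument.Parse(json);

        foreach (JsonElement item in doc.RootElement.EnumerateArray())
        {
            JsonElement moderation = item.GetProperty("ImageModeration");
            JsonElement text = item.GetProperty("TextDetection");
            JsonElement faces = item.GetProperty("FaceDetection");

            summaries.Add(new ImageSummary(
                item.GetProperty("ImageUrl").GetString() ?? "",
                moderation.GetProperty("IsImageAdultClassified").GetBoolean(),
                moderation.GetProperty("IsImageRacyClassified").GetBoolean(),
                faces.GetProperty("Count").GetInt32(),
                !string.IsNullOrWhiteSpace(text.GetProperty("Text").GetString())));
        }

        return summaries;
    }
}
```

Applied to the two results above, this would report Sample Image 1 as not adult, not racy, 4 faces, no text, and Sample Image 2 as racy but not adult, no faces, with text.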